Implementing blue/green deployments using Kubernetes and Envoy

Maximizing system uptime and reducing risk via advanced deployment strategies

Streak’s infrastructure started out on Google App Engine, which served us well for many years. Before I joined the company, Streak had outgrown what App Engine could provide and moved to the full Google Cloud Platform (GCP), with our infrastructure hosted on GKE (Google Kubernetes Engine).

Deployment was fairly simple: we push our main branch to GitHub, CircleCI picks up the change, builds and tests everything, then pushes the Docker image to GCR (Google Container Registry). Our deployment.yaml file had some lines like this:

```yaml
containers:
- image: gcr.io/ourproject/imagename:abcd123
```

Here, abcd123 is the short commit hash for the new image.

The old way

To deploy to production, a developer would edit this file, change the commit hash to the latest, and run:

```shell
kubectl apply -f deployment.yaml
```

This triggered a process that was largely hands-off. Kubernetes picked up the change and started transforming the deployment into the new desired state. At the time, the deployment ran at a fixed size of 150 pods. Because only the commit hash changed, Kubernetes performed what’s known as a “rolling update”, which is governed by a few strategy settings. At the time we had:

```yaml
strategy:
  rollingUpdate:
    maxSurge: 10
    maxUnavailable: 10
```

Since we’re not increasing the size of the deployment, but rather replacing pods in a fixed-size deployment, the maxUnavailable setting is the most relevant: 10 pods at a time begin the termination process. Termination isn’t instant. Our deployment both serves API requests, which complete as quickly as possible, and processes asynchronous tasks. The vast majority of those tasks finish as quickly as API requests, but a small percentage run longer because they need to process large volumes of data. We want to give those time to complete, but still force a shutdown if they take longer than necessary. That is handled by another setting:

```yaml
terminationGracePeriodSeconds: 700
```

This allows Jetty to shut down gracefully if it’s able to; otherwise Kubernetes forcefully terminates the pod after 700 seconds (just under 12 minutes), which is more than enough for even the longest tasks.[1] Our API sits behind Google Cloud Load Balancer, which performs SSL termination and routes traffic to the Kubernetes service associated with the deployment. The service keeps track of which pods are ready via their readiness probes, so live traffic is only routed to pods that are online and serving.

The end result was 150 pods shutting down 10 at a time. In the absolute worst case, each batch takes the full 700 seconds before being replaced with 10 new pods, so a deploy could take up to 3 hours to go out (15 batches × 700 seconds ≈ 2.9 hours). In practice, the worst case never occurred and most deploys took 20 to 40 minutes.
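The worst-case figure is straightforward arithmetic: ceil(150 / 10) = 15 batches, each waiting up to 700 seconds. A minimal Python sketch of that bound follows, assuming (as a simplification) that batches run strictly one after another and that replacement pods start instantly:

```python
import math


def worst_case_rollout_seconds(replicas: int, max_unavailable: int,
                               grace_period_s: int) -> int:
    """Upper bound for a rolling update that replaces `max_unavailable` pods
    per batch when every batch waits out the full termination grace period.
    Simplification: batches are sequential and pod startup time is ignored."""
    batches = math.ceil(replicas / max_unavailable)
    return batches * grace_period_s


seconds = worst_case_rollout_seconds(replicas=150, max_unavailable=10,
                                     grace_period_s=700)
print(f"{seconds} s = {seconds / 3600:.1f} h")  # 10500 s = 2.9 h
```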

Typically this wasn’t an issue; we could still deploy many times throughout the day. The downside was that if you had a critical bug to fix, it would take much longer for the fix to reach all pods unless you resorted to force-terminating them, which should be a last resort because it also terminates active user requests and background tasks.

Blue-green deployments

The concept behind the blue-green deployment strategy is that you have your existing deployment (the “blue” one) and you want to release a new deployment (the “green” one). Rather than replacing your running code in place, you temporarily run both in parallel. This allows you to:

- quickly scale up the new deployment without depending on simultaneously scaling down the old one
- keep both around for a while, so you can swap back if there’s a critical issue in the new deployment
- increase flexibility in what you deploy: once you have the infrastructure for splitting traffic between two deployments, you can choose to split traffic in other ways too

The last point on splitting traffic is where you can do some interesting things.

Design goals

We decided to put Envoy between Google Cloud Load Balancer and our service. Envoy is a proxy originally created at Lyft, and its users include AWS, Google, Netflix, Stripe, and many others. It’s what allows us to control traffic routing at a more granular level than we could before. We had a number of goals for the new design:

- Speed up the deploy process from approximately 30 minutes to as fast as possible.
- Be able to quickly roll back a bad deployment.
- Support canary deployments. Like the proverbial canary in the coal mine, a canary deployment lets you test changes on a small subset of users before rolling them out to everyone. There were a few types we wanted:
  - Deploy to some percentage of our traffic, for example 1% or 10%. This lets us do things like make performance tweaks and measure how the new code performs in production.[2]
  - Deploy to specific customers by their domain name.
  - Deploy to specific users by email address.
  - Deploy standalone canaries for independent testing.
- All of the above must work correctly with no dropped requests.

Additionally, it was a good opportunity to explore autoscaling. Rather than running a fixed number of nodes at a fixed cost, we’d like the deployment to scale automatically based on load. During peak hours, the deployment should scale to as many pods as are required to handle traffic. During off-peak hours, there’s no sense paying for pods that sit idle, so the deployment should scale down to a reasonable minimum.

The scaling targets are based on two metrics:

- CPU utilization. Most of our workload is I/O intensive: fetching from a cache, datastore, or remote API, doing permission checks, performing minimal transformations of the data, then responding. Our servers don’t deal with HTTPS encryption and decryption (Google Cloud Load Balancer handles that for us), but for the occasional request that does consume significant CPU resources we want to ensure we have some headroom.
- Thread utilization. We’ve configured each pod in our deployment with 40 threads for handling requests. When all 40 threads are busy, additional requests are queued until a thread becomes free. A request may spawn its own threads, for example to do parallel I/O, but those are separate from the 40 request-handling threads.

We set a target of 50% for each. In practice, we almost never see high CPU utilization, for the reasons mentioned above. The goal of the 50% thread-utilization target is headroom: running at half capacity lets us quickly absorb spikes of up to double our current request volume before new capacity comes online.[3]

For some quick back-of-the-envelope math: if we’re running a fixed 150 pods with 40 request threads each, that’s 6,000 simultaneous threads. If a typical API request takes 100 ms to complete, each thread handles 10 requests per second, so together they can handle about 60,000 requests per second before we would need to scale up.
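That estimate is an application of Little’s law (requests in flight = arrival rate × time per request), solved for throughput. A small Python sketch follows; the inputs are the illustrative figures from the paragraph above, and the 50% line reflects where the thread-utilization target would sit rather than a hard limit:

```python
def max_requests_per_second(pods: int, threads_per_pod: int,
                            avg_latency_ms: float) -> float:
    """Little's law rearranged: throughput = concurrency / latency.
    Assumes every thread stays busy and each request takes avg_latency_ms."""
    concurrency = pods * threads_per_pod          # 150 * 40 = 6,000 threads
    return concurrency * 1000 / avg_latency_ms    # requests per second


full = max_requests_per_second(pods=150, threads_per_pod=40, avg_latency_ms=100)
half = full * 0.5  # where a 50% thread-utilization target would sit
print(f"{full:,.0f} req/s at full thread use, {half:,.0f} req/s at 50%")
# 60,000 req/s at full thread use, 30,000 req/s at 50%
```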

Horizontal Pod Autoscaler

As with non-cloud server architecture, you can scale any given service horizontally or vertically. Vertical scaling means that if you’ve hit capacity running on a fixed number of servers with 2 CPUs and 8 GB of RAM, you upgrade those same servers to 4 CPUs and 16 GB of RAM. If that’s not enough, you move to 8 CPUs and 32 GB, and so on.

Horizontal scaling means that when you hit capacity, you keep adding more servers with the same specs as before. Kubernetes does this with the Horizontal Pod Autoscaler (HPA), which you configure with a YAML manifest describing how Kubernetes should scale a given resource, such as a deployment. The important bits are:

- minReplicas: for a system serving production traffic, you never want to scale down to a single replica, so this is where you set a reasonable minimum that is capable of handling the transition from off-peak to regular traffic.
- maxReplicas: at the opposite end, you want to control your costs and be aware of any issues causing unexpectedly high utilization. Setting a maximum ensures the deployment never scales beyond it.[4]
- scaleTargetRef: the resource the HPA scales up or down. In our case, this is kind: Deployment and name: <deployment name>.
- metrics: the metric(s) Kubernetes monitors, along with the target type and value.
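To make those fields concrete, here is a hedged Python sketch that builds an HPA manifest as a dictionary and prints it as JSON, which kubectl accepts just as it accepts YAML. It assumes a cluster serving the autoscaling/v2 API; the deployment name, replica bounds, and the thread_utilization metric name are hypothetical, and a Pods metric like that one only works if a custom-metrics adapter exposes it to Kubernetes. The 500m target is the thread target discussed in the next paragraph.

```python
import json


def hpa_manifest(deployment: str, min_replicas: int, max_replicas: int) -> dict:
    """Build an autoscaling/v2 HorizontalPodAutoscaler as a plain dict.
    The HPA is named after the deployment (a hypothetical convention)."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {   # 50% average CPU utilization across the deployment's pods
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {"type": "Utilization", "averageUtilization": 50},
                    },
                },
                {   # hypothetical per-pod thread metric, target 500m (= 0.5)
                    "type": "Pods",
                    "pods": {
                        "metric": {"name": "thread_utilization"},
                        "target": {"type": "AverageValue", "averageValue": "500m"},
                    },
                },
            ],
        },
    }


# e.g. `python hpa.py | kubectl apply -f -` (file name hypothetical)
print(json.dumps(hpa_manifest("api-deployment", 20, 200), indent=2))
```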

For our thread metric, we set the target at 500m, which can be read as “500 milli” and is equivalent to a value of 0.5 or 50%. The official Kubernetes site has a great writeup of how it works, the scaling algorithm, and more.
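The core rule of that algorithm is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), evaluated per metric, with the largest proposal winning. The Python sketch below is a simplification for intuition only: it applies the default 10% tolerance and the min/max clamp, but ignores stabilization windows, scaling policies, and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int,
                     metrics: list[tuple[float, float]],
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule. `metrics` holds (current_value, target_value)
    pairs; each proposes ceil(current_replicas * current / target),
    the largest proposal wins, and the result is clamped to [min, max]."""
    proposals = []
    for current_value, target_value in metrics:
        ratio = current_value / target_value
        if abs(ratio - 1.0) <= tolerance:
            proposals.append(current_replicas)  # within tolerance: no change
        else:
            proposals.append(math.ceil(current_replicas * ratio))
    return max(min_replicas, min(max_replicas, max(proposals)))


# 40 pods; threads average 0.8 busy vs. a 0.5 target; CPU at 30% vs. 50%.
print(desired_replicas(40, [(0.8, 0.5), (30, 50)], min_replicas=20, max_replicas=200))  # 64
```

Here the CPU metric alone would propose scaling down to 24 pods, but the busier thread metric proposes 64, and the larger proposal wins.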

Setting up Envoy

Put simply, Envoy operates on clusters and routes. Think of a cluster as where you want to send requests; a route describes which requests go to which cluster. In our case, the cluster identifies our Kubernetes deployment as the destination for API traffic, and the route identifies which API paths to send there. The simplest route sends everything to a single cluster:

```yaml
routes:
- match:
    prefix: "/"
  route:
    cluster: api
```

However, for blue/green deployments we have the concept of a primary API and a secondary API. The primary is the current, active (blue) deployment, and the secondary is the new (green) deployment. Envoy supports weighted clusters, which look like this:

```yaml
routes:
- match:
    prefix: "/"
  route:
    weighted_clusters:
      clusters:
      - name: secondaryapi
        weight: 10
      - name: primaryapi
        weight: 90
```

This will direct 10% of traffic to the new deployment while directing 90% of traffic to the old deployment. As the new deployment comes online and more and more pods are ready, we can gradually increase the weight of the secondary cluster and reduce the weight of the primary cluster.

But how do we know when the new deployment is ready to serve traffic? And how do we correctly update Envoy’s configuration?

Weighing traffic

Kubernetes provides a convenient way to get the current status of a deployment. Let’s say we just deployed our API with a target of 80 replicas. Running:

```shell
kubectl get deployment api-deployment -o yaml
```

returns output that includes some status information:

```yaml
status:
  availableReplicas: 19
```

Given our example target of 80 replicas, we know that 19/80, or 23.75%, are ready to serve traffic. We round this down to the nearest integer, 23, and use it as the weight for the secondary API. Since Envoy’s weighted cluster weights must add up to 100, the weight of the primary API is then 77. We then update our Envoy configuration with these values, and the route looks like:

```yaml
routes:
- match:
    prefix: "/"
  route:
    weighted_clusters:
      clusters:
      - name: secondaryapi
        weight: 23
      - name: primaryapi
        weight: 77
```
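The weight calculation is small enough to sketch in Python. This assumes the availableReplicas and target replica counts have already been read from the deployment’s status; the function names are hypothetical, not Streak’s deploy script. Integer floor division gives the rounded-down percentage without floating-point surprises.

```python
def split_weights(available_replicas: int, target_replicas: int) -> tuple[int, int]:
    """Return (secondary, primary) weights that add up to 100.

    The secondary weight is the percentage of the new deployment's target
    replicas that are available, rounded down."""
    if target_replicas <= 0:
        raise ValueError("target_replicas must be positive")
    ready = min(available_replicas, target_replicas)   # never report over 100%
    secondary = (100 * ready) // target_replicas       # integer floor
    return secondary, 100 - secondary


def weighted_route(secondary: int, primary: int) -> dict:
    """The weighted route shown above, as a Python dict."""
    return {
        "match": {"prefix": "/"},
        "route": {"weighted_clusters": {"clusters": [
            {"name": "secondaryapi", "weight": secondary},
            {"name": "primaryapi", "weight": primary},
        ]}},
    }


print(split_weights(available_replicas=19, target_replicas=80))  # (23, 77)
```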

Envoy supports updating its configuration at runtime via a symbolic link swap, which replaces files atomically, so Envoy never reads a half-written file. To support this, we store all our Envoy configuration files in a single Kubernetes ConfigMap, which makes it trivial to overwrite them with new contents. To turn a ConfigMap update into a link swap inside the Envoy deployment, we run Crossover in a sidecar container. Crossover watches the ConfigMap for changes; when it detects one, it reads the ConfigMap’s contents, writes the new files to the filesystem, and performs a link swap on each. Envoy listens for link swaps and applies the changes to its running configuration.
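The link-swap technique itself is generic. A minimal Python sketch follows; it is not Crossover’s code, just the standard write-then-rename pattern the paragraph above describes, and it assumes a POSIX filesystem where rename replaces an existing symlink in a single step.

```python
import os
import tempfile


def atomic_publish(link_path: str, contents: str) -> str:
    """Publish new file contents behind `link_path` with a symlink swap.

    Readers that open `link_path` see either the old file or the new one,
    never a half-written file. Old target files are left for later cleanup.
    """
    directory = os.path.dirname(os.path.abspath(link_path))
    # 1. Write the complete new contents to a uniquely named file.
    fd, target = tempfile.mkstemp(dir=directory, prefix=".envoy-config-")
    with os.fdopen(fd, "w") as f:
        f.write(contents)
        f.flush()
        os.fsync(f.fileno())
    # 2. Point a temporary symlink at it (same directory, same filesystem).
    tmp_link = target + ".lnk"
    os.symlink(target, tmp_link)
    # 3. Atomically rename the temporary link over the live one.
    os.replace(tmp_link, link_path)
    return target
```

Each call leaves `link_path` pointing at a fresh file; the path you pass (for example, wherever Envoy reads its cluster file) is up to your setup.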

By checking the status of the new deployment in a loop every few seconds, our deploy script keeps raising the secondary’s traffic percentage as new pods become ready to serve traffic, and Kubernetes and Envoy handle the rest. Once we reach 100%, we promote the secondary to primary, and the deploy is done.[5]

The same mechanism supports both a full rollout, where the end goal is for the new deployment to receive 100% of the traffic, and a partial rollout to a developer-specified percentage of traffic, where we keep updating Envoy until that target percentage is reached.
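The deploy loop described above can be sketched as follows. get_status and set_weights are hypothetical hooks standing in for reading the deployment’s status and publishing the rendered Envoy route; the 5-second poll interval and 30-minute timeout are assumptions, and promotion of the secondary to primary is left out.

```python
import time
from typing import Callable


def roll_out(get_status: Callable[[], tuple[int, int]],
             set_weights: Callable[[int, int], None],
             target_percent: int = 100,
             poll_seconds: float = 5.0,
             timeout_seconds: float = 1800.0) -> bool:
    """Shift traffic to the secondary as its pods become available.

    get_status() returns (available_replicas, target_replicas) for the new
    deployment; set_weights(secondary, primary) publishes the route.
    Returns True once the secondary weight reaches target_percent,
    or False if the timeout expires first."""
    deadline = time.monotonic() + timeout_seconds
    last = None
    while time.monotonic() < deadline:
        available, target = get_status()
        ready_pct = (100 * min(available, target)) // target if target else 0
        secondary = min(ready_pct, target_percent)  # never exceed the requested share
        if secondary != last:                       # only republish on change
            set_weights(secondary, 100 - secondary)
            last = secondary
        if secondary >= target_percent:
            return True
        time.sleep(poll_seconds)
    return False
```

Passing target_percent=10 gives a partial canary rollout: the loop stops as soon as the secondary carries 10% of traffic.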

Routing by email or domain

In addition, we can route requests based on the user’s email address or domain name by changing how routes match. For normal requests, our UI appends ?email=user@example.com to API queries. Additionally, requests that create asynchronous tasks add an X-Streak-Request-Email header containing the email address. We can match both of these with the following match definitions:

```yaml
- match:
    prefix: "/"
    query_parameters:
    - name: "email"
      string_match:
        safe_regex:
          regex: {{DOMAIN_OR_EMAIL_REGEX}}
  route:
    # (route definition omitted)

- match:
    prefix: "/"
    headers:
    - name: "X-Streak-Request-Email"
      safe_regex_match:
        regex: {{DOMAIN_OR_EMAIL_REGEX}}
  route:
    # (route definition omitted)
```

This is a template: before applying it, we perform variable substitution, replacing {{DOMAIN_OR_EMAIL_REGEX}} with the appropriate email address or domain regex.
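Building {{DOMAIN_OR_EMAIL_REGEX}} is where escaping matters: an unescaped dot in example.com would match any character. A hedged Python sketch with hypothetical helper names follows. It relies on Envoy’s RE2-based safe_regex matching the entire value (so no ^ or $ anchors are added), and for ordinary addresses and domain names the escapes re.escape produces, such as \., are also valid in RE2. The template is trimmed to the header match.

```python
import json
import re

ROUTE_TEMPLATE = """\
- match:
    prefix: "/"
    headers:
    - name: "X-Streak-Request-Email"
      safe_regex_match:
        regex: {{DOMAIN_OR_EMAIL_REGEX}}
"""


def build_match_regex(emails: list[str], domains: list[str]) -> str:
    """Alternation matching any listed email exactly, or any address
    at a listed domain (hypothetical helper)."""
    parts = [re.escape(e) for e in emails]
    parts += [".*@" + re.escape(d) for d in domains]
    if not parts:
        raise ValueError("need at least one email or domain")
    return "|".join(parts)


def render(template: str, regex: str) -> str:
    # json.dumps yields a double-quoted string that is also a valid YAML
    # scalar, so the regex's backslashes survive YAML parsing intact.
    return template.replace("{{DOMAIN_OR_EMAIL_REGEX}}", json.dumps(regex))


regex = build_match_regex(["alice@example.com"], ["acme.io"])
print(render(ROUTE_TEMPLATE, regex))
```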

Rolling back a bad deploy

Another of our design goals was to quickly roll back a bad deployment, so our deploy script supports a swap command. Implementing it was easy: we first check that the old deployment hasn’t scaled down too far and, if it hasn’t, we update our Envoy cluster definitions to swap the endpoints of primaryapi and secondaryapi. Each cluster’s definition looks something like this simplified example:

```yaml
resources:
- "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
  name: primaryapi
  type: STRICT_DNS
  dns_lookup_family: V4_ONLY
  lb_policy: LEAST_REQUEST
  load_assignment:
    cluster_name: primaryapi
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: {{PRIMARY_CLUSTERIP_SERVICE}}.default.svc.cluster.local
              port_value: 8080  # hypothetical port; use your service's port
```

Again, we do templated variable substitution here and update the Kubernetes ConfigMap. Crossover picks up the change and performs a link swap, Envoy picks up the link swap, and we’ve swapped back in seconds.
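The swap command’s logic can be sketched in a few lines of Python. This is an illustration under assumptions, not Streak’s script: the service names are hypothetical, and the “hasn’t scaled down too far” check is modeled as a minimum available-replica threshold chosen by the caller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoints:
    """Service names substituted into the primaryapi / secondaryapi
    cluster templates (e.g. {{PRIMARY_CLUSTERIP_SERVICE}})."""
    primary_service: str
    secondary_service: str


def swap(current: Endpoints, previous_available: int, min_safe_replicas: int) -> Endpoints:
    """Roll back by exchanging the primary and secondary services.

    previous_available: available replicas of the deployment we swap back
    to (currently the secondary). min_safe_replicas is a hypothetical
    threshold for "hasn't scaled down too far"."""
    if previous_available < min_safe_replicas:
        raise RuntimeError(
            f"previous deployment has {previous_available} available replicas; "
            f"need {min_safe_replicas} before it can take all traffic again")
    return Endpoints(primary_service=current.secondary_service,
                     secondary_service=current.primary_service)


def service_address(service: str, namespace: str = "default") -> str:
    """Cluster-internal DNS name, following the cluster template above."""
    return f"{service}.{namespace}.svc.cluster.local"


rolled_back = swap(Endpoints("api-v2", "api-v1"), previous_available=30, min_safe_replicas=10)
print(service_address(rolled_back.primary_service))  # api-v1.default.svc.cluster.local
```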

Deploy script

We perform deploys using a simple command-line script. Here is the current suite of options the deploy command supports:

In addition, other commands let us cancel a deployment, check its status, or swap back to the previous deploy.

Testing

This all sounds good in theory, but with so many moving parts, how can we verify that the whole chain works correctly (Google Cloud Load Balancer handing traffic to Envoy, Envoy routing it to a Kubernetes service, and the service reaching our API deployment) while Horizontal Pod Autoscaling is also adding and removing pods?

Enter Tsung

Tsung is Erlang-based software designed for load testing. To use it, you provide a configuration file describing the traffic you want to send. In my testing, I used a simple config:

```xml
<?xml version="1.0"?>
<!DOCTYPE tsung SYSTEM "/usr/share/tsung/tsung-1.0.dtd">
<!-- The DTD path depends on where Tsung is installed. -->
<tsung loglevel="info" dumptraffic="protocol">
  <clients>
    <client host="localhost" use_controller_vm="true" maxusers="1000000" />
  </clients>
  <servers>
    <server host="api.streak.com" port="443" type="ssl" weight="100" />
  </servers>
  <load>
    <arrivalphase phase="1" duration="1" unit="minute">
      <users arrivalrate="5" unit="second" />
    </arrivalphase>
    <arrivalphase phase="2" duration="1" unit="minute">
      <users arrivalrate="10" unit="second" />
    </arrivalphase>
    <arrivalphase phase="3" duration="1" unit="minute">
      <users arrivalrate="15" unit="second" />
    </arrivalphase>
    <arrivalphase phase="4" duration="1" unit="minute">
      <users arrivalrate="20" unit="second" />
    </arrivalphase>
    <arrivalphase phase="5" duration="1" unit="minute">
      <users arrivalrate="25" unit="second" />
    </arrivalphase>
    <arrivalphase phase="6" duration="30" unit="minute">
      <users arrivalrate="30" unit="second" />
    </arrivalphase>
  </load>
  <sessions>
    <session name="consume-thread" probability="80" type="ts_http">
      <request>
        <http url="/path/to/threadSleep" method="GET" version="1.1">
          <www_authenticate userid="YOUR_API_KEY_HERE" passwd="" />
        </http>
      </request>
    </session>
    <session name="hello-world" probability="20" type="ts_http">
      <request>
        <http url="/path/to/hello" method="GET" version="1.1">
          <www_authenticate userid="YOUR_API_KEY_HERE" passwd="" />
        </http>
      </request>
    </session>
  </sessions>
</tsung>
```

The “load” section describes a timeline of traffic to send:

- an initial ramp-up phase sending 5 new users per second for 1 minute
- increasing to 10 new users per second for 1 minute, then 15, 20, and 25 per second, 1 minute each
- then sustaining 30 new users (one request each) per second for 30 minutes

The “sessions” section defines what kind of traffic to send:

- 80% of requests go to a “threadSleep” endpoint, which ties up the request for 5 seconds
- 20% of requests go to a “hello” endpoint, which returns a simple “hello world” response

Because most requests hold a thread for 5 seconds while new users keep arriving every second, this nicely simulates a gradual increase in traffic that lets the HPA scale up the deployment until, over time, we are serving hundreds of simultaneous users.

A successful test of Envoy, the new deployment system, and Horizontal Pod Autoscaling means that 100% of the requests we send receive a successful response, with zero timeouts and zero dropped requests.

Results

I’ll quote from the report I produced after implementing this:

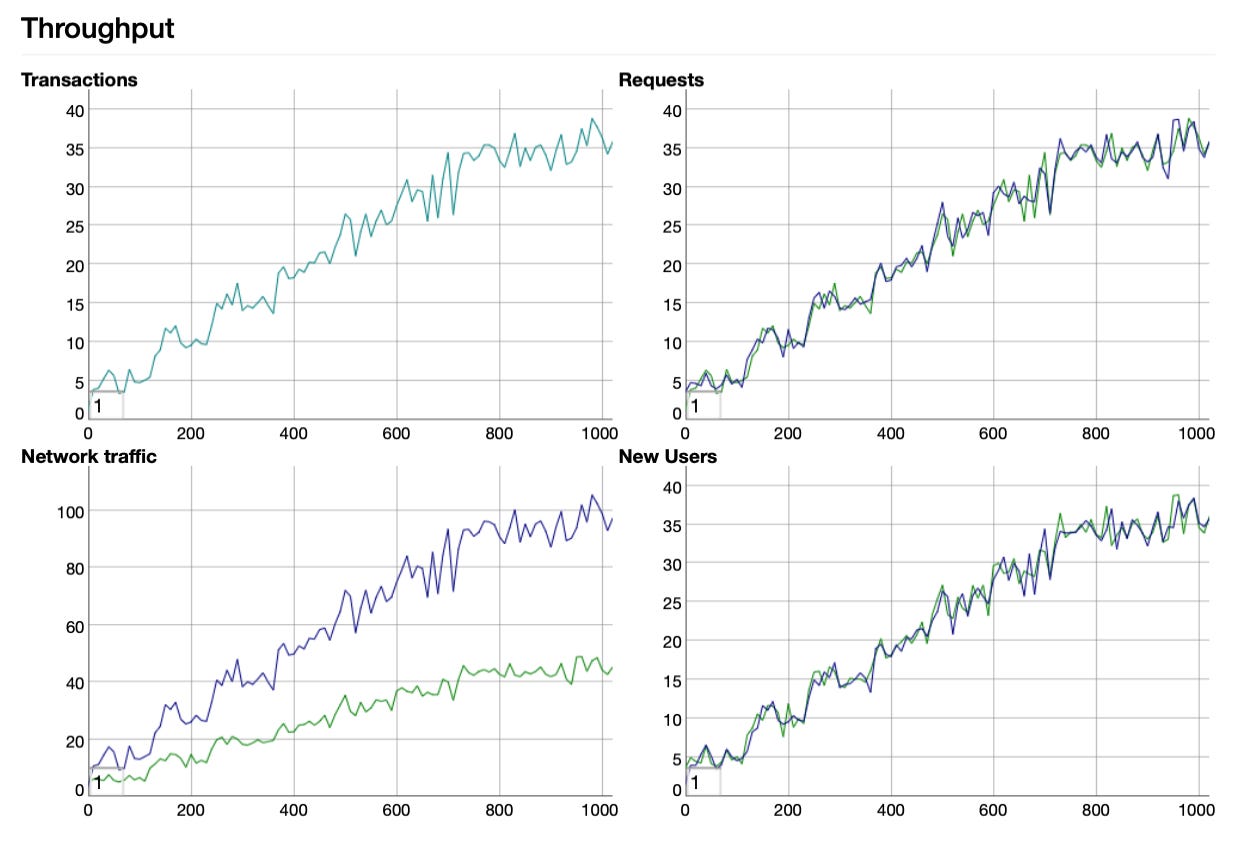

The combination of redeployment plus horizontal pod autoscaling has been shown to be successful. This was accomplished by using the tool Tsung to direct traffic at the test Envoy instance to ramp up load prior to performing a new deployment, gradually cutting over traffic from the old to the new deployment as pods initialized and became available, then switching entirely to the new deployment.

Highlights:

- 20,865 requests sent (80% taking 5 seconds, 20% returning “hello world” immediately)
- 100% of requests succeeded with a 200 OK HTTP status code. Zero server failures scaling up, during cutover, or after cutover.
- The only problems were errors connecting to the API, limited to 10 connections (out of 20K+, representing 0.05% of requests attempted), and likely due to testing over a Wi-Fi connection from my laptop.

Some Tsung-generated graphs in the report show that as the number of user requests scaled up over time, the API responses kept pace, both while scaling up and during cutover:

Conclusion

The change in how we deploy our API has been very successful, as demonstrated by comprehensive testing as well as the thousands of deployments we’ve done since the system was introduced. Deployment times dropped from an average of 30 minutes to roughly 3 minutes. Additionally, we now support a number of new blue/green strategies that give us flexibility when we want to test changes in production for a single user, an entire team, or a chosen percentage of all traffic.

Lastly, because our API now scales up and down dynamically via the use of Horizontal Pod Autoscaler, our GCP bill has benefited from the lower utilization during off-peak hours:

Engineering at Streak

We work on many interesting challenges affecting billions of requests daily involving many terabytes of data. For more information, visit https://www.streak.com/careers

Notes

[1] It’s possible that if you’re processing millions of items (such as in a data migration), a task could take longer. However, where this is possible we chunk the work into smaller batches and either run them in parallel or re-enqueue the task to handle the next chunk.

[2] We also have a comprehensive experiment system that lets us run different code paths depending on whether a user or an entire account is in the experiment, and experiments can also be percentage based. This is usually the preferred method because it outlives any one deployment, but sometimes you’re almost certain a change is an improvement, and this is another tool in the toolbox for when that makes sense.

[3] In practice, scaling is fast but not instant. Kubernetes needs to bring new nodes online, start any DaemonSet pods on each new node, bring the desired pods online, and then run any initialization that occurs on the pod itself.

[4] If you do hit the maximum, it’s important to have good monitoring and alerting in place so you can take action. That’s a blog post of its own.

[5] There are a few other things we do to clean up deployments. We update the HPA on the old deployment to set its minimum replicas (minReplicas) to 1, so it gradually scales down over time. We also delete the previous secondary API: we keep the current primary deployment (N) and the secondary (N-1), but there’s no need to keep the N-2 deployment, its HPA, or the associated Kubernetes service.
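As a hedged illustration of that cleanup policy, the Python sketch below only builds kubectl commands rather than running them. It assumes, hypothetically, that each deployment’s HPA and ClusterIP service share the deployment’s name; kubectl patch with a JSON merge patch and kubectl delete are standard commands, but the naming scheme is an assumption.

```python
import json
import shlex


def cleanup_commands(deployments_newest_first: list[str]) -> list[list[str]]:
    """Keep N (primary) and N-1 (secondary), let N-1 drift down to one pod,
    and delete N-2 and older. Assumes (hypothetically) that each
    deployment's HPA and service are named after the deployment."""
    commands = []
    if len(deployments_newest_first) >= 2:
        previous = deployments_newest_first[1]
        patch = json.dumps({"spec": {"minReplicas": 1}})
        commands.append(["kubectl", "patch", "hpa", previous,
                         "--type", "merge", "-p", patch])
    for stale in deployments_newest_first[2:]:
        for kind in ("deployment", "hpa", "service"):
            commands.append(["kubectl", "delete", kind, stale])
    return commands


for cmd in cleanup_commands(["api-v3", "api-v2", "api-v1"]):
    print(shlex.join(cmd))  # printed only; nothing is executed
```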